With the rise of artificial intelligence (AI) applications, the need to store, index, and search vast amounts of data efficiently has become more pressing than ever. In domains like personalized search, recommendation engines, chatbots, and generative AI, retrieving relevant data in real time is essential.

These applications rely heavily on vector embeddings, mathematical representations of data, to capture the meaning of and relationships between different types of content such as text, images, audio, or video. However, as the size and complexity of these datasets grow, traditional search methods struggle to deliver the required performance and scalability.

Many AI systems use Approximate Nearest Neighbor Search (ANNS) algorithms to quickly identify relevant data points by finding the closest matches in a high-dimensional vector space. However, while effective, popular vector search techniques like HNSW or ScaNN face significant challenges at scale. These include high memory consumption, slower performance with updates, and increased costs. The need to rebuild vector indices periodically to maintain search accuracy often disrupts operations and consumes resources, underscoring the need for a more efficient and cost-effective solution like DiskANN.

Microsoft DiskANN (Disk-based Approximate Nearest Neighbor Search) is a robust solution that addresses the challenges of traditional vector search methods. With its balanced approach to speed, accuracy, and memory efficiency, DiskANN provides much-needed relief to AI developers and data scientists. Its integration with Azure Cosmos DB further enhances its power, making it a secure and reliable solution for organizations dealing with massive amounts of vectors while keeping operational costs in check.

The integration of DiskANN with Azure Cosmos DB is a game-changer in terms of scalability. It combines the strengths of both systems, offering fast, low-latency search with a reliable, scalable, and distributed database engine. This innovation allows developers to build intelligent applications that scale effortlessly, manage real-time updates without compromising accuracy, and ensure high availability.

It’s a secure investment for businesses, future-proofing their AI solutions and providing peace of mind about their long-term viability.

DiskANN and Azure Cosmos DB Integration

The integration of DiskANN into Azure Cosmos DB marks a significant leap forward in how vector search is managed at scale. DiskANN is a graph-based vector indexing system that efficiently handles vast datasets with minimal memory usage. Unlike traditional vector search algorithms that depend heavily on large memory operations, DiskANN leverages solid-state drives (SSDs) to store large portions of data, maintaining only the essential, quantized vectors in memory. This innovative design ensures that the system remains fast, responsive, and cost-effective even when working with billions of data points.
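To make the memory argument concrete, here is a back-of-the-envelope sketch. The corpus size, the embedding dimensionality, and the one-byte-per-dimension compression are illustrative assumptions, not figures taken from DiskANN or Azure Cosmos DB, and graph overhead is ignored.

```python
def vector_data_gib(num_vectors: int, dims: int, bytes_per_dim: float) -> float:
    """Approximate size of the raw vector data in GiB (graph edges not counted)."""
    return num_vectors * dims * bytes_per_dim / 2**30


NUM_VECTORS = 1_000_000_000  # hypothetical corpus size
DIMS = 768                   # hypothetical embedding dimensionality

full_precision = vector_data_gib(NUM_VECTORS, DIMS, 4)  # float32, kept on SSD
quantized = vector_data_gib(NUM_VECTORS, DIMS, 1)       # assumed 1 byte per dimension, kept in RAM

print(f"full-precision vectors: {full_precision:,.0f} GiB")
print(f"quantized vectors:      {quantized:,.0f} GiB")
```

Under these assumptions the in-memory portion is a quarter of the full-precision data, which is the kind of saving the SSD-backed design is aiming for.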

How DiskANN Works

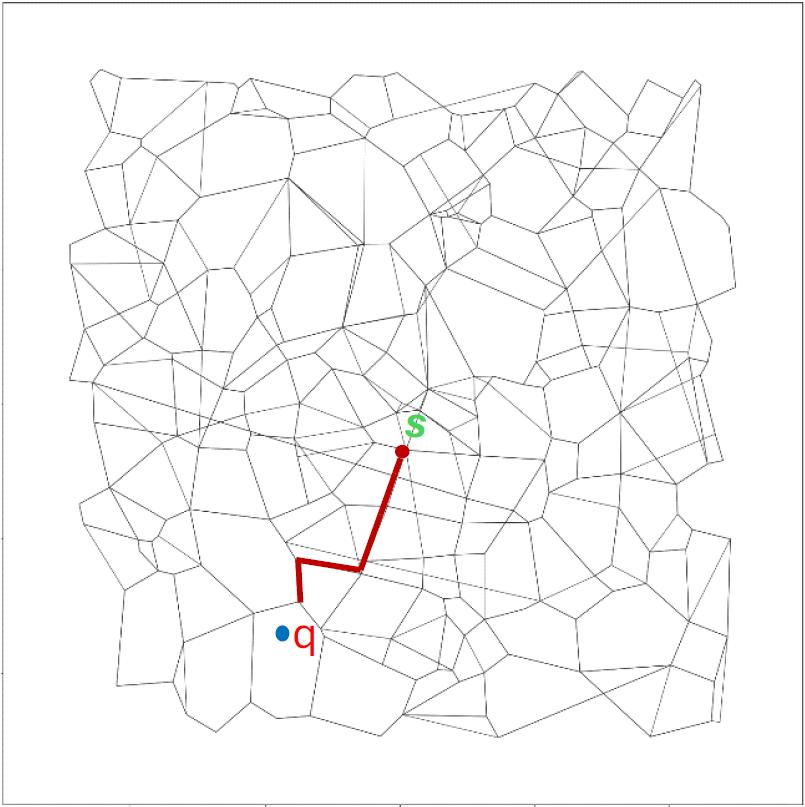

DiskANN uses a greedy search algorithm over a graph-based index. The search starts at a designated entry point in the graph and traverses toward the target vector by following the nearest neighbors. The graph structure ensures that most queries converge to the desired result in just a few hops, reducing the need for excessive disk I/O operations. By quantizing vectors (storing compressed versions for most searches and accessing full-precision vectors only when necessary), DiskANN achieves a favorable throughput-to-latency trade-off without compromising accuracy.
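The traversal described above can be sketched in a few lines of Python. This is a simplified illustration on a tiny hand-built graph, not DiskANN's actual implementation: the `beam_width` parameter, the toy data, and the use of plain Euclidean distance on uncompressed vectors are all assumptions made for the example.

```python
import math


def greedy_search(graph, vectors, entry, query, k=1, beam_width=2):
    """Walk the neighbor graph from `entry`, always expanding the closest
    unvisited candidate and keeping only the `beam_width` best candidates."""
    def dist(node):
        return math.dist(vectors[node], query)

    candidates = {entry}
    visited = set()
    while True:
        frontier = [n for n in candidates if n not in visited]
        if not frontier:
            break  # every retained candidate has been expanded
        current = min(frontier, key=dist)
        visited.add(current)
        candidates.update(graph[current])  # one "hop": look at its neighbors
        candidates = set(sorted(candidates, key=dist)[:beam_width])
    return sorted(candidates, key=dist)[:k]


# Toy index: five 2-D points connected in a chain (hypothetical data).
vectors = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (2.0, 0.0), 3: (3.0, 0.0), 4: (3.0, 1.0)}
graph = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}

nearest = greedy_search(graph, vectors, entry=0, query=(3.0, 0.9))
print(nearest)
```

Each loop iteration corresponds to one hop. In a disk-based index, a hop is roughly where an SSD read would occur, which is why a graph that converges in few hops matters.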

Why Azure Cosmos DB?

With its planet-scale distributed architecture and multi-region support, Azure Cosmos DB offers the perfect platform to host DiskANN. Cosmos DB provides automatic sharding and partitioning, ensuring that large datasets are seamlessly distributed across nodes. This scalability allows applications to efficiently ingest, store, and query billions of vectors. Additionally, Azure Cosmos DB offers 99.999% availability through multi-region replication and failover mechanisms, minimizing the risk of downtime.

Another key feature is Cosmos DB’s serverless and autoscaling capabilities, which dynamically adjust compute resources based on traffic demands. This ensures that applications remain responsive during peak loads while saving costs during idle times. Integrating DiskANN within Cosmos DB’s architecture allows vector search to benefit from these scaling features without additional effort from developers.

Seamless Query Capabilities

Developers can interact with the integrated DiskANN-powered vector search using familiar SQL-like queries provided by Cosmos DB. The search engine supports filtered queries, combining vector search with traditional database predicates, such as filtering by time ranges, categories, or user-specific metadata. These queries benefit from Cosmos DB’s multitenancy support, enabling different applications or user groups to perform isolated searches on the same dataset without performance degradation.
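As a rough illustration of a filtered query, the sketch below builds a parameterized query string that orders results by the `VectorDistance` function of the Cosmos DB NoSQL query language and restricts them with an ordinary predicate. The property names (`c.embedding`, `c.category`, `c.title`) and the helper `build_filtered_vector_query` are hypothetical; adapt them to your own schema.

```python
def build_filtered_vector_query(query_embedding, category, top_k=10):
    """Return (query_text, parameters) for a category-filtered vector search.

    Property names are placeholders for illustration only.
    """
    query_text = (
        f"SELECT TOP {int(top_k)} c.id, c.title, "
        "VectorDistance(c.embedding, @queryVector) AS score "
        "FROM c "
        "WHERE c.category = @category "
        "ORDER BY VectorDistance(c.embedding, @queryVector)"
    )
    parameters = [
        {"name": "@queryVector", "value": list(query_embedding)},
        {"name": "@category", "value": category},
    ]
    return query_text, parameters


query_text, parameters = build_filtered_vector_query([0.12, -0.03, 0.88], "shoes", top_k=5)
print(query_text)
```

With the `azure-cosmos` Python SDK, the pair would typically be passed to a container client as `container.query_items(query=query_text, parameters=parameters, enable_cross_partition_query=True)`. The embedding is sent as a parameter rather than inlined so that the query text stays short and reusable.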

Performance and Cost-Efficiency of DiskANN in Azure Cosmos DB

DiskANN’s integration into Azure Cosmos DB is a thoughtful approach to address the growing demand for scalable and cost-efficient vector search solutions. It bridges the gap between operational databases and specialized vector search systems by leveraging graph-based indexing with SSD-backed storage. The result is a system that offers high throughput and minimal latency, even with billions of vectors.

Performance Benchmarks

In the published benchmark results, DiskANN demonstrates superior performance compared to other industry standards like HNSW and ScaNN. Specifically, DiskANN achieves:

- Up to 5x throughput improvement over in-memory ScaNN on large datasets.


What stands out here is the dual capability: in-memory mode ensures blazing-fast responses when performance is critical, while SSD-based mode allows for substantial cost savings by reducing memory requirements, which becomes crucial when scaling to billions of vectors. This flexibility is particularly beneficial for enterprises balancing speed, accuracy, and budget.

Real-Time Updates and Incremental Indexing

A common problem with many vector search engines is the inability to handle real-time updates without degrading performance. Many systems require complete re-indexing after a certain threshold of updates, which is operationally expensive. DiskANN, however, introduces incremental updates, allowing new data points to be seamlessly added without the need to rebuild the entire index. This is a crucial advantage for AI systems that deal with frequent updates (e.g., recommendation engines or personalized search).
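The idea of adding a point without rebuilding the index can be illustrated with a deliberately naive sketch. It links a new node to its nearest existing nodes and caps each node's degree; the brute-force neighbor selection, the distance-only pruning, and the `max_degree` parameter are simplifying assumptions for this example and do not reproduce DiskANN's actual insertion and pruning logic.

```python
import math


def insert_vector(graph, vectors, new_id, new_vec, max_degree=3):
    """Add one node in place by wiring it to its nearest existing nodes."""
    # Brute-force nearest neighbors (a real index would search the graph instead).
    nearest = sorted(vectors, key=lambda n: math.dist(vectors[n], new_vec))[:max_degree]
    vectors[new_id] = new_vec
    graph[new_id] = list(nearest)
    for n in nearest:
        graph[n].append(new_id)  # back-edge so the new node is reachable
        if len(graph[n]) > max_degree:
            # Naive pruning: keep only the closest neighbors of n.
            graph[n] = sorted(
                graph[n], key=lambda m: math.dist(vectors[m], vectors[n])
            )[:max_degree]


# Existing toy index (hypothetical data); only the touched nodes change.
vectors = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (2.0, 0.0)}
graph = {0: [1], 1: [0, 2], 2: [1]}

insert_vector(graph, vectors, new_id=3, new_vec=(1.0, 0.5))
print(graph)
```

Only the new node and its chosen neighbors are modified, which is what makes the update cheap compared with a full rebuild. Note that pruning purely by distance can disconnect parts of a graph, so production systems use more careful pruning rules.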

Additionally, DiskANN supports intelligent caching strategies, storing frequently accessed graph nodes in memory. This ensures a consistent trade-off between memory usage and query latency, making the system adaptive to high-throughput and low-cost scenarios.

Cost-Efficiency with Autoscale and Serverless Models

One of the standout features from our review is how Azure Cosmos DB’s autoscale capabilities align perfectly with DiskANN’s design. Traditional vector databases often face challenges in optimizing costs when scaling up. However, Cosmos DB’s ability to automatically adjust throughput based on traffic ensures that resources are used efficiently. During periods of low demand, the system scales down, minimizing operational costs without compromising availability.

The serverless option further adds flexibility. Developers can start small without needing to provision fixed capacity upfront, scaling up as their vector search workloads grow. This cost-effective scalability makes the DiskANN-Cosmos DB combination appealing to enterprises running dynamic AI workloads, such as seasonal recommendation engines or chatbots with fluctuating usage.

Use Cases and Practical Applications

The integration of DiskANN with Azure Cosmos DB opens a wide range of practical applications for businesses building AI-powered solutions. From search engines to recommendation systems, the ability to handle large datasets with minimal latency is becoming essential. As an external architect, I see several high-impact areas where this solution can be leveraged effectively.

AI-Powered Search Engines and Personalized Recommendations

Modern search engines, chatbots, and recommendation systems thrive on retrieval-augmented generation (RAG) models. These systems must quickly fetch relevant information from vector embeddings generated from large corpora, such as product catalogs, documents, or videos. DiskANN’s ability to perform approximate nearest neighbor search (ANNS) efficiently means these systems can serve personalized results in real time.

For example, e-commerce platforms can leverage DiskANN-powered vector search to deliver highly relevant product recommendations based on user behavior or past purchases. Similarly, media streaming platforms can use it to recommend content by matching users’ preferences with similar embeddings from their content library.

Enterprise AI Solutions with Copilot Integration

The paper mentions that Microsoft has integrated DiskANN into critical products like Microsoft 365 and Windows Copilot. This suggests the potential for enterprise-level productivity tools to improve dramatically with vector search capabilities. For instance, organizations can use semantic search within internal document repositories, allowing employees to retrieve information quickly from emails, files, or reports using natural language queries.

Such seamless search capability helps reduce downtime and improve decision-making, especially in data-intensive industries like finance, legal services, and healthcare, where retrieving the correct information at the right time is crucial.

Real-Time Recommendations for Advertising Platforms

DiskANN is also relevant for ad tech and marketing platforms that must make real-time decisions about which advertisements to serve to users. By embedding user profiles, browsing behavior, and contextual data into vector representations, the platform can recommend relevant ads that align with user interests. The ability to handle incremental updates ensures that the recommendations remain fresh and accurate, even with rapidly changing user behavior.

Best Practices for Developers Using DiskANN in Azure Cosmos DB

- Combine SQL Queries with Vector Search: Developers can leverage Cosmos DB’s SQL-like syntax to combine structured queries with vector search. This allows them to filter results based on multiple criteria, such as time ranges or specific product categories.

- Optimize Index Configuration: Depending on workload, developers can choose between in-memory mode for ultra-low latency or SSD-backed mode for cost savings. Intelligent caching of graph nodes can also improve performance in high-traffic applications.

- Use Multi-Region Replication for Global Applications: For applications with a global user base, Cosmos DB’s multi-region replication ensures low-latency access and high availability, regardless of where users are located.

- Monitor Query Performance and Tune Parameters: Performance tuning with DiskANN’s quantization options (e.g., scalar or product quantization) can ensure that memory usage stays within limits while maintaining high recall. Monitoring tools available in Cosmos DB can help developers make necessary real-time adjustments.
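To give a feel for what scalar quantization trades away, here is a minimal sketch of a generic 8-bit scheme. It is not the quantizer used by DiskANN or Cosmos DB; the fixed value range `[lo, hi]` and the function names are assumptions chosen for illustration.

```python
def scalar_quantize(vector, lo, hi):
    """Map each float in [lo, hi] to a one-byte code in 0..255."""
    scale = (hi - lo) / 255
    return bytes(min(255, max(0, round((x - lo) / scale))) for x in vector)


def dequantize(codes, lo, hi):
    """Recover approximate floats from the one-byte codes."""
    scale = (hi - lo) / 255
    return [lo + c * scale for c in codes]


original = [-1.0, 0.0, 0.5, 1.0]        # hypothetical embedding values
codes = scalar_quantize(original, lo=-1.0, hi=1.0)
restored = dequantize(codes, lo=-1.0, hi=1.0)

max_error = max(abs(a - b) for a, b in zip(original, restored))
print(len(codes), "bytes instead of", 4 * len(original))
print("largest reconstruction error:", max_error)
```

Each value shrinks from four bytes to one, at the cost of a bounded rounding error (at most half a quantization step). Watching how recall changes as such compression gets more aggressive is the kind of tuning the last bullet refers to.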

Industries Benefiting from DiskANN in Cosmos DB

| Industry | Use Cases |
|---|---|
| Retail | Personalized product recommendations; Dynamic pricing and promotions based on real |
| Healthcare | Fast retrieval of patient records; Search through medical research and literature |
| Finance | Real-time fraud detection using behavioral analysis; Portfolio optimization and recommendations |
| Media & Entertainment | Personalized content and playlists; Enhanced search across vast media libraries |
| Enterprise Productivity | Knowledge management with fast document search; Seamless retrieval from emails and reports |
| Advertising & Marketing | Real-time ad targeting based on user profiles; Contextual recommendations for ad campaigns |

Conclusion and Recommendations

As we continue to evaluate the DiskANN integration with Azure Cosmos DB, this solution presents a compelling value proposition for organizations aiming to implement scalable vector search in AI-powered applications.

It offers a unique blend of advanced indexing algorithms and a robust operational database, ensuring businesses can handle growing data volumes while maintaining low latency and high availability. However, like any technology adoption, aligning implementation with the organization’s goals, architecture, and operational constraints is essential.

Why Does This Integration Make Sense?

From our perspective, DiskANN’s graph-based indexing approach is particularly well-suited for enterprises dealing with high-dimensional data and frequent updates. Unlike legacy systems that struggle with scaling or incur high costs from memory consumption, DiskANN’s SSD-based architecture and quantization techniques provide a balanced trade-off between performance and cost efficiency. Cosmos DB’s autoscale capabilities minimize operational overhead, making the solution ideal for fluctuating workloads in retail, finance, and media industries.

The incremental indexing and multi-region replication features address common pain points around real-time updates and system reliability. For organizations that demand both transactional and vector search capabilities within the same platform, the convergence of traditional SQL queries with vector search is a significant advantage, reducing the need to maintain separate systems.

Recommendations for Adoption

Given the strategic nature of this solution, we recommend that technology leaders evaluate the alignment of DiskANN and Cosmos DB with their long-term AI and data strategy.

Here are the key considerations:

- Start with a Pilot Deployment: Test the SSD-based mode to explore the balance between memory usage and performance. This approach will provide insights into resource consumption before scaling the solution organization-wide.

- Optimize for Use Case Specificity: Choose between in-memory or SSD modes based on workload needs. Use in-memory mode for latency-sensitive applications like recommendation engines and SSD mode for cost-sensitive scenarios like archival searches.

- Leverage Multi-Region Replication for Availability: Enterprises operating across geographies should use Cosmos DB’s multi-region replication to ensure consistent performance and low-latency access.

- Plan for Continuous Tuning: Regular performance monitoring is necessary to adjust quantization and caching strategies and ensure the system remains optimized as workloads evolve.

Potential Risks and Mitigation Strategies

Every technology comes with trade-offs. One challenge with this solution is balancing memory usage with SSD access. Enterprises must carefully tune caching strategies to avoid bottlenecks in high-throughput scenarios. Additionally, developer expertise in managing SQL-based queries and vector search operations is essential for seamless adoption. Early developer training and piloting can effectively mitigate these risks.

While DiskANN with Azure Cosmos DB offers powerful features like low-latency vector search, multi-region replication, and autoscaling, these capabilities come with significant operational costs. The reliance on SSD-backed storage and frequent autoscaling can drive up expenses, particularly for applications with large datasets or unpredictable workloads. Additionally, multi-region deployments, while essential for ensuring global availability, increase infrastructure and data transfer costs.

Organizations must carefully monitor resource usage and tune configurations, such as limiting in-memory caching to high-priority queries or selectively enabling autoscale, to avoid overspending. Cost management therefore becomes critical for enterprises aiming to balance performance and scalability with budget constraints, so that the solution remains efficient and financially sustainable.

Final Verdict

In summary, DiskANN with Azure Cosmos DB is an innovative, forward-looking solution well-suited for enterprises aiming to scale AI-powered search and recommendations. However, the actual value lies in the system’s strategic deployment and ongoing optimization. Organizations that invest time in testing, performance tuning, and aligning workloads with business goals will maximize their ROI from this solution.

This solution is particularly relevant for businesses operating in highly competitive, data-intensive sectors that must deliver personalized experiences while controlling infrastructure costs. DiskANN in Cosmos DB offers a path to sustainable, scalable, and efficient AI adoption for these enterprises.
