The rise of large language models (LLMs) has reshaped many fields, from natural language processing (NLP) to chatbots and automation platforms. However, the growing size and complexity of models such as GPT and LLaMA come at a steep cost: high computational demands, energy consumption, and latency. As organizations increasingly rely on these models to build innovative products and services, many leaders and developers face challenges in scaling their AI systems sustainably and affordably.

Microsoft’s BitNet framework, released via GitHub, proposes a solution to these challenges. It introduces 1-bit quantization, an architecture that encodes each neural network parameter as -1, 0, or 1 (three states, or about 1.58 bits of information per weight). This reduction in model precision brings multiple benefits: smaller memory footprints, faster inference, and lower energy costs. Unlike traditional post-training quantization, BitNet applies quantization-aware training from the outset, so the model learns to maintain competitive accuracy under the low-precision constraint.

For developers and business leaders, BitNet reduces the cost of AI operations, making it possible to run LLMs efficiently, even on smaller devices or limited cloud infrastructure. This approach unlocks new opportunities to deploy advanced AI applications in environments where traditional models would be too costly or inefficient. From real-time chatbots to recommendation engines and edge AI, BitNet empowers organizations to leverage powerful AI without breaking the bank.

This innovation aligns with Microsoft’s sustainability initiatives and offers a path forward for companies looking to scale AI responsibly. With integration into open-source ecosystems such as HuggingFace and LLaMA, BitNet makes it easier for developers to quickly adopt and deploy 1-bit LLMs, fostering a new era of cost-efficient AI solutions.

In the following sections, we’ll explore how BitNet works, what sets it apart from traditional architectures, and how software engineers, data scientists, and enterprise leaders can benefit from this new approach to scalable AI.

Core Innovation: What Is 1-Bit Quantization?

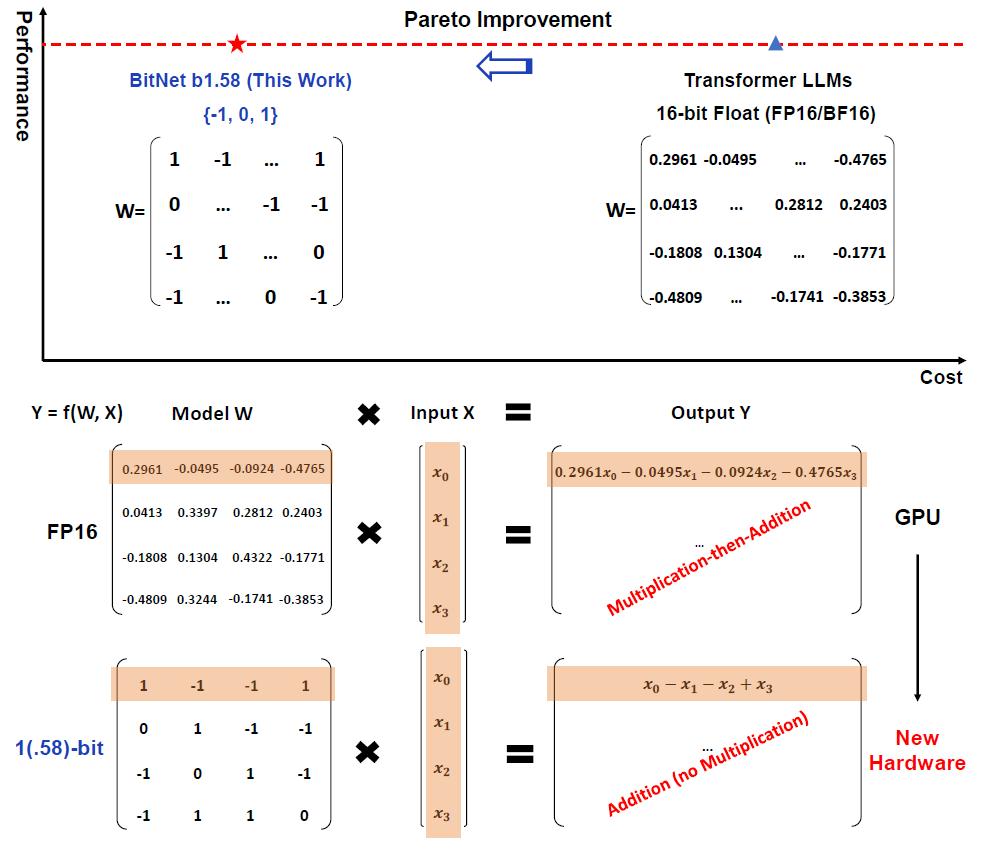

BitNet introduces 1-bit quantization, a bold step away from conventional floating-point formats like FP16 or BF16, which are common in today’s large-scale language models (LLMs). Traditional AI models use higher-precision representations to encode neural network parameters, consuming more memory and energy. BitNet, however, transforms these weights into a ternary system, with each parameter being either -1, 0, or 1.
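
To make the ternary mapping concrete, here is a minimal sketch in plain Python. It assumes an "absmean" scheme (divide each weight by the mean absolute weight, round, then clip to [-1, 1]), which is one common way to describe such a quantizer. The function names `quantize_ternary` and `dequantize` are illustrative and are not taken from the BitNet codebase.

```python
def quantize_ternary(weights, eps=1e-8):
    """Map float weights to codes in {-1, 0, 1} plus one shared scale.

    Assumption: absmean scaling. This is a sketch, not BitNet's actual code.
    """
    # Shared scale: the mean absolute value of the weights.
    scale = sum(abs(w) for w in weights) / len(weights)
    codes = []
    for w in weights:
        # Normalize, round to the nearest integer, then clip to [-1, 1].
        q = round(w / (scale + eps))
        codes.append(max(-1, min(1, q)))
    return codes, scale


def dequantize(codes, scale):
    """Approximate the original weights from ternary codes and the scale."""
    return [c * scale for c in codes]


weights = [0.9, -0.05, -1.2, 0.4, 0.0, -0.6]
codes, scale = quantize_ternary(weights)
print(codes)                     # ternary codes, one per weight
print(dequantize(codes, scale))  # coarse reconstruction of the weights
```

Small weights collapse to 0 and large ones saturate at plus or minus the scale, which is why training with the quantizer in the loop matters for accuracy.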

This shift to low-bit precision directly reduces the computational load and memory footprint, making it possible to deploy advanced LLMs on smaller devices with fewer resources. The design leverages BitLinear layers, a drop-in replacement for the conventional linear (matrix multiplication) layers in Transformer models, to handle these 1-bit weights efficiently. BitLinear operations also reduce the number of floating-point multiplications, minimizing latency and power consumption during inference.
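
The reduction in multiplications follows directly from the weight values: multiplying by 1, -1, or 0 is just an add, a subtract, or a skip. The sketch below illustrates that idea for a matrix-vector product. It is a simplified illustration, not the BitLinear implementation; `ternary_matvec` is a hypothetical name, and the sketch leaves out details such as activation quantization and normalization.

```python
def ternary_matvec(ternary_rows, scale, x):
    """Compute y = scale * (W @ x) where W holds only -1, 0, or 1.

    Per weight, the inner loop only adds, subtracts, or skips.
    """
    out = []
    for row in ternary_rows:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi   # +1 weight: add the activation
            elif w == -1:
                acc -= xi   # -1 weight: subtract the activation
            # 0 weight: contributes nothing, so it is skipped
        out.append(acc * scale)  # one multiply per output row, not per weight
    return out


W = [[1, 0, -1],
     [-1, 1, 1]]
x = [2.0, 3.0, 0.5]
y = ternary_matvec(W, 0.5, x)
print(y)
```

For comparison, a dense floating-point layer of the same shape would perform one multiplication per weight.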

Why Does 1-Bit Precision Matter?

1-bit quantization achieves significant memory savings and speed improvements without sacrificing performance. Compared to 8-bit or 4-bit quantization, which still rely heavily on post-training optimizations, BitNet’s quantization-aware training ensures that the model is optimized from the start. This approach yields higher stability and accuracy across various NLP tasks, such as language generation and text classification.

Developers who previously struggled with the infrastructure costs of hosting large models can now deploy scalable solutions with fewer hardware requirements. This makes edge computing viable for running complex AI workloads, expanding opportunities to apply AI in mobile devices, IoT systems, and low-latency environments.

BitNet’s design also simplifies fine-tuning and integration with existing models. For instance, pre-trained models based on HuggingFace or LLaMA can easily adopt the 1-bit framework with minimal changes to their architecture, enabling faster deployment in production environments.

By focusing on scalable, lightweight AI, BitNet represents a major step forward for the broader AI community, empowering companies to deliver AI services at reduced costs without compromising quality.

Performance Benchmarks and Practical Benefits

The true power of BitNet lies in the practical performance gains it offers. Microsoft has demonstrated that 1-bit quantization reduces the memory footprint and provides significant speed and energy efficiency improvements over traditional architectures. Compared to FP16-based models, the industry standard, BitNet models use up to 7x less memory and achieve 4x faster inference.
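
A quick back-of-the-envelope calculation shows where the memory savings come from. The sketch below counts weight storage only, for a hypothetical 7B-parameter model, and assumes ternary weights are stored in 2 bits each. Both are illustrative assumptions. End-to-end savings are typically smaller than the weights-only ratio, because activations, caches, and any layers kept in higher precision still consume memory.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Weights-only memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 7_000_000_000  # hypothetical 7B-parameter model (assumption)

fp16_gb = weight_memory_gb(params, 16)
ternary_gb = weight_memory_gb(params, 2)  # assumes 2 bits per ternary weight

print(f"FP16 weights:            {fp16_gb:.2f} GB")
print(f"Ternary weights (2-bit): {ternary_gb:.2f} GB")
print(f"Weights-only ratio:      {fp16_gb / ternary_gb:.1f}x")
```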

Key Performance Gains

1. Inference Speed: BitNet’s faster inference time allows AI systems to respond in real time, even on devices with limited hardware capabilities. This makes it ideal for low-latency applications like virtual assistants, recommendation engines, and chatbots.

2. Energy Efficiency: BitNet models consume far less energy because they perform fewer floating-point operations. Tests show that BitNet achieves 71.4x lower energy consumption per matrix multiplication than FP32-based models, aligning with the growing demand for sustainable AI solutions.

3. Model Scaling Without Sacrificing Accuracy: BitNet’s models scale smoothly to larger parameter counts while maintaining competitive accuracy. For example, a 13B BitNet model can outperform a 3B FP16 model while consuming fewer computational resources, demonstrating the scalability of 1-bit LLMs.

These benefits make BitNet attractive to organizations looking to integrate advanced AI while minimizing operational costs. Cloud-based deployments, which typically incur significant infrastructure expenses, can be made more affordable through BitNet’s optimized models.

Impact on Edge and Mobile AI Deployment

BitNet enables the deployment of LLMs on smaller, resource-constrained devices, such as smartphones and IoT sensors. This creates new possibilities for edge AI, where real-time data processing is essential, but large models cannot typically run due to hardware limitations. With BitNet, developers can now build mobile applications that leverage the full power of LLMs without requiring high-end GPUs or cloud access.

In sectors like healthcare and retail, this opens doors to on-device AI solutions, such as personalized customer interactions or diagnostic assistants that operate independently of centralized servers. Enterprises can reduce dependence on high-cost infrastructure while delivering powerful AI-driven features to end users.

This ability to run complex models on local devices represents a significant shift toward decentralized AI, a trend aligning with privacy requirements and cost-efficiency goals across industries.

Impact on Hardware and Deployment Strategies

The introduction of BitNet’s 1-bit architecture is not just a software innovation but a potential paradigm shift in hardware design and AI deployment strategies. Running large-scale language models (LLMs) traditionally required high-end GPUs or cloud infrastructure, incurring significant costs for organizations. However, BitNet reduces these dependencies by optimizing models to run efficiently on smaller or less powerful devices.

Optimized Hardware for 1-Bit Computation

One of BitNet’s key advantages is that it opens the door to hardware-optimized designs for AI workloads. Traditional hardware designed for floating-point operations may not be fully optimized for BitNet’s 1-bit models. Microsoft envisions custom chips or low-power processors that maximize BitNet’s potential by focusing on integer-based operations and minimizing floating-point calculations.
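
One reason integer-oriented hardware suits ternary weights is that they pack densely into ordinary bytes. The sketch below shows one possible layout, four weights per byte using 2 bits each. This layout and the names `pack_ternary` and `unpack_ternary` are assumptions for illustration; the storage format that BitNet's kernels actually use may differ.

```python
def pack_ternary(codes):
    """Pack ternary codes (-1, 0, 1) into bytes, four 2-bit fields per byte."""
    packed = bytearray()
    for i in range(0, len(codes), 4):
        byte = 0
        for j, c in enumerate(codes[i:i + 4]):
            # Shift -1/0/1 to 0/1/2 so each code fits in an unsigned 2-bit field.
            byte |= (c + 1) << (2 * j)
        packed.append(byte)
    return bytes(packed)


def unpack_ternary(packed, count):
    """Recover the first `count` ternary codes from packed bytes."""
    codes = []
    for byte in packed:
        for j in range(4):
            codes.append(((byte >> (2 * j)) & 0b11) - 1)
    return codes[:count]  # drop padding fields from the last byte


codes = [1, 0, -1, 1, 0, -1]
packed = pack_ternary(codes)
print(len(codes), "weights stored in", len(packed), "bytes")
print(unpack_ternary(packed, len(codes)) == codes)
```

Unpacking needs only shifts and masks, which are cheap integer operations on most processors.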

This shift could have significant implications for edge computing and IoT devices, where energy efficiency and performance are paramount. As models grow in complexity, businesses can leverage specialized low-power hardware to bring advanced AI capabilities closer to the data source, reducing latency and dependency on cloud infrastructure.

Lowering Cloud Costs and Operational Complexity

For enterprises relying on cloud services, BitNet significantly reduces operational expenses. Models trained with BitNet consume less memory and run faster, meaning businesses can scale AI solutions without proportional increases in infrastructure costs. This is particularly beneficial for companies deploying real-time services, such as chatbots or personalized recommendation systems, where latency and responsiveness directly impact user experience.

Additionally, multi-cloud strategies become more feasible with BitNet. Since 1-bit models require fewer compute resources, enterprises can switch between cloud providers more flexibly, optimizing for cost and performance without being locked into high-end configurations.

Enabling AI at the Edge and Beyond

With BitNet’s efficiency, mobile and edge devices that were previously limited by processing power can now run advanced LLMs locally. This creates new possibilities across industries:

- Retail: Personalized shopping assistants powered by local AI models.

- Healthcare: AI diagnostic tools deployed on handheld medical devices, eliminating reliance on cloud servers.

- Manufacturing: Predictive maintenance models running directly on factory equipment, ensuring seamless operations with minimal downtime.

By reducing the reliance on high-performance cloud-based resources, BitNet decentralizes AI. It aligns with privacy-focused trends in industries like finance and healthcare, where data cannot be easily offloaded to external servers.

BitNet’s flexibility and hardware compatibility allow developers to explore these new deployment scenarios without major architectural changes, ensuring that resource constraints do not hinder innovation.

With these advantages, BitNet promises a future-proof AI strategy that balances performance, sustainability, and scalability across various deployment environments.

Seamless Integration and Adoption Across Ecosystems

One of BitNet’s biggest strengths is its ease of integration with existing AI frameworks. Microsoft designed BitNet to be compatible with open-source platforms, such as HuggingFace models and LLaMA architectures, allowing developers to transition smoothly to 1-bit LLMs without significant pipeline changes. This design ensures that engineers and data scientists can adopt the framework without re-engineering existing solutions, accelerating the deployment of optimized models.

Quantization-Aware Training for Robust Performance

Unlike post-training quantization approaches, which often lead to performance degradation, BitNet uses quantization-aware training (QAT). This means the model learns to optimize performance with low-precision parameters right from the beginning, ensuring high accuracy even in 1-bit configurations. For developers, QAT eliminates the guesswork involved in fine-tuning models after training, providing better results across a variety of language tasks.
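
The core idea of QAT can be sketched in a few lines. The toy example below keeps full-precision "latent" weights, runs the forward pass with their ternary-quantized version, and applies the gradient to the latent weights as if the quantizer were the identity (a straight-through estimator). The absmean quantizer, the tiny dataset, the learning rate, and all function names are assumptions chosen for illustration; this is not BitNet's training code.

```python
def quantize_ternary(weights, eps=1e-8):
    """Absmean ternary quantizer (assumed scheme): codes in {-1, 0, 1} plus a scale."""
    scale = sum(abs(w) for w in weights) / len(weights)
    codes = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return codes, scale


def forward(codes, scale, x):
    """Prediction of a single linear unit using the quantized weights."""
    return scale * sum(c * xi for c, xi in zip(codes, x))


def train_step(latent, data, lr=0.05):
    """One QAT step: quantized forward pass, gradient applied to latent weights."""
    codes, scale = quantize_ternary(latent)
    grads = [0.0] * len(latent)
    for x, target in data:
        err = forward(codes, scale, x) - target
        for i, xi in enumerate(x):
            # Straight-through estimator: treat d(quantized)/d(latent) as 1.
            grads[i] += 2 * err * xi / len(data)
    return [w - lr * g for w, g in zip(latent, grads)]


# Toy dataset of (input, target) pairs, made up for this sketch.
data = [([1.0, 0.0, 1.0], 0.0),
        ([0.0, 1.0, 0.0], 0.0),
        ([1.0, 1.0, 0.0], 1.0)]

latent = [0.1, -0.2, 0.3]  # full-precision weights kept during training
for _ in range(50):
    latent = train_step(latent, data)

codes, scale = quantize_ternary(latent)
print("ternary codes:", codes, "scale:", round(scale, 3))
```

Only the ternary codes and the scale would be needed at inference time. The latent weights exist so that many small gradient updates can accumulate before a code flips.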

This makes BitNet especially useful for enterprises working on NLP-based solutions such as:

- Chatbots that require real-time responses with low latency.

- Customer service automation.

- Recommendation engines.

With tools available through Microsoft’s GitHub repository (BitNet on GitHub), developers can experiment with and deploy BitNet models alongside existing solutions with minimal friction. Pre-trained models can be fine-tuned or adapted to specific use cases using BitNet’s lightweight framework, making it an attractive option for businesses seeking rapid AI innovation without excessive infrastructure changes.

Encouraging Adoption Through Open-Source Collaboration

BitNet’s integration with open-source platforms ensures the community can contribute enhancements and collaborate on further optimization techniques. This openness fosters faster innovation and helps developers identify new applications for 1-bit LLMs across industries.

As organizations adopt multi-cloud strategies to optimize AI deployment costs, BitNet’s low resource consumption helps keep workloads portable across cloud providers. Developers have fewer hardware or architecture dependencies to worry about, making it easier to shift workloads between providers without compromising performance.

BitNet’s seamless integration capabilities and community-driven development make it a key driver of accessible AI innovation, encouraging organizations of all sizes to experiment with and adopt scalable, efficient models.

Conclusion

BitNet marks a significant leap forward in scalable, efficient AI development, addressing the most pressing challenges of deploying large language models (LLMs): high computational costs, memory consumption, and energy inefficiency. By leveraging 1-bit quantization and introducing BitLinear layers, Microsoft has reimagined how AI models can perform optimally with minimal resources.

For developers and enterprise leaders, BitNet offers tangible benefits: faster inference, reduced operational costs, and easier deployment across various environments, including edge devices and cloud platforms. This makes BitNet an ideal solution for real-time applications like chatbots, recommendation engines, and mobile AI tools, which require low-latency performance without sacrificing accuracy.

Empowering a New Wave of AI Innovation

By embracing open-source principles, Microsoft has ensured that BitNet’s benefits are available to the broader AI community. Its compatibility with HuggingFace models and LLaMA frameworks facilitates rapid adoption, empowering businesses to build next-generation AI solutions with reduced hardware and infrastructure dependencies.

This allows even small enterprises and developers to compete in the AI landscape, as they can now access high-performance AI without incurring prohibitive costs.

A Path Toward Sustainable AI

As organizations increasingly prioritize sustainability, BitNet offers a viable path forward by drastically reducing energy consumption. Its ability to run advanced models on low-power devices aligns with the demand for environmentally friendly AI deployments. This helps organizations meet green computing goals and ensures that AI innovations can scale globally across diverse environments, from urban data centers to remote edge locations.

Looking Ahead

With BitNet’s release on GitHub, Microsoft has paved the way for further exploration of 1-bit architectures. Future developments may involve specialized hardware optimizations tailored to 1-bit models and the exploration of new AI use cases that leverage this unique architecture. As developers experiment with BitNet’s framework, we can expect the emergence of even more efficient AI solutions, reshaping how AI interacts with business, technology, and society at large.

In this era of accessible, cost-effective AI, BitNet stands out as a catalyst for change, encouraging innovation that is both sustainable and scalable.

For anyone looking to leverage AI, whether to build better customer experiences or optimize internal processes, BitNet provides the tools and framework to do so efficiently and responsibly. This combination of performance, sustainability, and accessibility positions BitNet as a transformative force in the future of AI.

Want to discuss BitNet or consult with the AIIQ team on how you can deploy this for your company?

Please reach out to us or comment below.

Explore the framework:

● BitNet on GitHub

● Insights from IEEE Spectrum